Hydrological models and model evaluation

21 important questions on Hydrological models and model evaluation

What is the difference between plotting Q on a log scale vs a normal scale in a hydrograph? How can you use this to differentiate between quickflow and baseflow?

The log is a non-linear transformation.

Draw a straight line starting at the inflection point. From this point on, all Q is assumed to originate from the slow, linear groundwater (GW) reservoir.

Plot this line as the baseflow separation line.

Everything that behaves more non-linearly than that (above this line) should come from fast, non-linear runoff processes. All flow above this line is quickflow.

Baseflow (probably GW) should behave like a linear reservoir. It should therefore decay exponentially in time, which plots as a straight line on a log scale.
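The log-scale argument can be made concrete with a minimal sketch. It assumes a single linear reservoir, Q(t) = Q0 · exp(-t/k). On a log scale, ln Q then drops by the same amount every day, so a least-squares line through ln Q recovers the recession constant k. The values Q0 = 5 and k = 30 days are hypothetical.

```python
import math

def linear_reservoir_recession(q0, k, n_days):
    """Baseflow from a linear reservoir: Q(t) = Q0 * exp(-t / k)."""
    return [q0 * math.exp(-t / k) for t in range(n_days)]

def fit_recession_constant(q):
    """Estimate k from the least-squares slope of ln(Q) against t (slope = -1/k)."""
    t = list(range(len(q)))
    ln_q = [math.log(v) for v in q]
    t_mean = sum(t) / len(t)
    y_mean = sum(ln_q) / len(ln_q)
    cov = sum((ti - t_mean) * (yi - y_mean) for ti, yi in zip(t, ln_q))
    var = sum((ti - t_mean) ** 2 for ti in t)
    return -var / cov  # k = -1 / slope, with slope = cov / var

# Hypothetical recession: Q0 = 5 (any flow unit), k = 30 days
q = linear_reservoir_recession(q0=5.0, k=30.0, n_days=20)

# On a log scale the daily decrease is constant, i.e. a straight line
daily_drop = [math.log(a) - math.log(b) for a, b in zip(q, q[1:])]
print(min(daily_drop), max(daily_drop))  # both close to 1/30

print(fit_recession_constant(q))  # close to 30.0
```

In a real hydrograph, flow lying above this straight line early in the recession would be attributed to quickflow.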

Why are hydrological models generally poor at predicting extremes? Why is this a problem?

It is a problem because extremes are exactly the conditions that cause damage, and they are scientifically the most interesting.

Models exist at different levels. Assumptions, preconceptions, theories and physical laws can already be considered models. They are a form of ... model? And what could they grow into?


What is the main difference between conceptual and physically-based models, and what do they have in common?

Physically-based models are built on differential equations, which does not necessarily make them better.

Both solve the same mass balance, and in that sense they are equally physical.

We will mainly use conceptual models, in their lumped version.
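In its simplest lumped form, the shared mass balance can be sketched as below. This assumes one storage S, fed by precipitation P and drained by evaporation E and discharge Q. The linear-reservoir outflow Q = S/k is an illustrative assumption, not necessarily the model used in the course:

$$\frac{dS}{dt} = P - E - Q, \qquad Q = \frac{S}{k}$$

Without input (P = E = 0) this reduces to dS/dt = -S/k. The solution is S(t) = S_0 e^{-t/k} and Q(t) = (S_0/k) e^{-t/k}, the exponential recession that plots as a straight line on a log scale.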

Name the 5 steps of model development

2. Numerical implementation: the details are very important for the model outcome.

3. Code verification: does your model reflect the concepts you had in mind? Your model concept might change during step 2.

4. Model parameter calibration or optimization: optimize your parameters (based on a training dataset).

5. Model output validation: what is the value of your model outcome? (The rest of the split dataset is used for validation.)
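Steps 4 and 5 can be sketched as a split-sample test. The sketch rests on several assumptions:

- a hypothetical one-parameter linear-reservoir bucket with explicit daily steps;
- synthetic precipitation;
- synthetic "observations" generated with k = 6 days plus a small deterministic error.

k is calibrated by grid search on the first half of the record, and the result is validated with NSE on the second half.

```python
import math

def simulate(precip, k, s0=0.0):
    """Hypothetical linear-reservoir bucket: Q = S/k, explicit daily mass balance."""
    s, q_out = s0, []
    for p in precip:
        q = s / k        # outflow from current storage (k >= 1 keeps S non-negative)
        s = s + p - q    # mass balance: dS = P - Q
        q_out.append(q)
    return q_out

def sse(obs, sim):
    return sum((o - s) ** 2 for o, s in zip(obs, sim))

def nse(obs, sim):
    mean_obs = sum(obs) / len(obs)
    return 1.0 - sse(obs, sim) / sum((o - mean_obs) ** 2 for o in obs)

# Synthetic forcing: a 10 mm storm every 7 days over 120 days
precip = [10.0 if t % 7 == 0 else 0.0 for t in range(120)]
# Synthetic "observations": true k = 6 days with a 2 % multiplicative error
obs = [q * (1.0 + 0.02 * math.sin(t)) for t, q in enumerate(simulate(precip, 6.0))]

split = 60  # first half: calibration, second half: validation

# Step 4: calibrate k by grid search (1.0, 1.5, ..., 20.0) on the calibration period
candidates = [1.0 + 0.5 * i for i in range(39)]
k_cal = min(candidates, key=lambda k: sse(obs[:split], simulate(precip, k)[:split]))

# Step 5: validate on the independent second half (the model still runs from day 0)
nse_val = nse(obs[split:], simulate(precip, k_cal)[split:])
print(f"calibrated k = {k_cal}, validation NSE = {nse_val:.3f}")
```

Running the model from day 0 and scoring only the validation days acts as a simple warm-up. This way the initial condition s0 = 0 does not affect the validation score.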

Which factors determine the optimum in model complexity?

- Predictive performance

- Data availability

- Model complexity

Optimum: beyond a certain point, adding complexity reduces predictive performance.

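Explain the difference between accurate and precise (and inaccurate and imprecise). Use dartboards in your explanation. Which statistical concepts do they represent?

Bias = accuracy: how close the darts land, on average, to the bullseye.

Variance = precision: how tightly the darts cluster, wherever that cluster is.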
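The dartboard picture translates directly into numbers. Below is a sketch with four hypothetical boards and the bullseye at (0, 0). Bias is taken as the distance from the mean hit to the bullseye, and variance as the mean squared distance of the hits from their own mean.

```python
import math

def bias_and_variance(throws, target=(0.0, 0.0)):
    """Bias: distance from the mean hit to the bullseye (inaccuracy).
    Variance: mean squared distance of the hits from their own mean (imprecision)."""
    n = len(throws)
    mx = sum(x for x, _ in throws) / n
    my = sum(y for _, y in throws) / n
    bias = math.hypot(mx - target[0], my - target[1])
    variance = sum((x - mx) ** 2 + (y - my) ** 2 for x, y in throws) / n
    return bias, variance

# Four hypothetical dartboards, bullseye at (0, 0)
boards = {
    "accurate and precise": [(0.1, 0.0), (-0.1, 0.0), (0.0, 0.1), (0.0, -0.1)],
    "precise but inaccurate": [(2.1, 2.0), (1.9, 2.0), (2.0, 2.1), (2.0, 1.9)],
    "accurate but imprecise": [(1.5, 0.0), (-1.5, 0.0), (0.0, 1.5), (0.0, -1.5)],
    "inaccurate and imprecise": [(3.5, 2.0), (0.5, 2.0), (2.0, 3.5), (2.0, 0.5)],
}
for name, throws in boards.items():
    b, v = bias_and_variance(throws)
    print(f"{name:26s} bias = {b:.2f}  variance = {v:.2f}")
```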

What is Occam's razor (law of parsimony)?

Entities should not be multiplied without necessity.

When presented with competing hypotheses that make the same predictions, one should always select the solution with the fewest assumptions. Note: the razor is not meant to be a way of choosing between hypotheses that make different predictions(!).

Name 3 implications of Occam's razor.

- Determine how many assumptions and conditions are necessary for each explanation to be correct.

- If an explanation requires extra assumptions or conditions, demand evidence proportional to the strength of each claim.

- Extraordinary claims require extraordinary evidence.

Name the 3 inputs and 2 outputs of a model simulation.

Input:

- Model parameters/variables

- Initial conditions (for all model state variables, which typically have a differential equation behind them)

- Model/atmospheric forcing (e.g. P, T, RH)

Output:

- Model variables (e.g. Q, ET)

- State variables (e.g. storage S, whose derivative dS/dt forms the left-hand side of the differential equation)
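The input/output structure can be sketched as a single model-run function. The two-parameter bucket, its ET rule and the forcing values are all hypothetical. The function takes parameters, an initial condition and forcing as input, and returns flux variables (Q, ET) and the state variable S. The water-balance check at the end ties back to the mass balance that every model solves.

```python
from dataclasses import dataclass

@dataclass
class Params:
    """Model parameters of a hypothetical two-parameter bucket."""
    s_max: float  # storage at which ET reaches its potential rate [mm]
    k: float      # linear-reservoir timescale [days]

def run_model(params, s0, precip, pet):
    """Inputs: parameters, initial condition s0, forcing (precip, pet).
    Outputs: flux variables (Q, ET) and the state variable S per time step."""
    s = s0
    out = {"Q": [], "ET": [], "S": []}
    for p, e_pot in zip(precip, pet):
        et = e_pot * min(1.0, s / params.s_max)  # storage-limited evaporation
        q = s / params.k                         # linear-reservoir discharge
        s = s + p - et - q                       # mass balance: dS/dt = P - E - Q
        out["Q"].append(q)
        out["ET"].append(et)
        out["S"].append(s)
    return out

params = Params(s_max=100.0, k=10.0)
precip = [0.0, 20.0, 5.0, 0.0, 0.0, 12.0, 0.0]  # hypothetical forcing [mm/day]
pet = [3.0] * len(precip)
result = run_model(params, s0=50.0, precip=precip, pet=pet)

# Water-balance check: initial storage + inputs - outputs = final storage
closure = 50.0 + sum(precip) - sum(result["ET"]) - sum(result["Q"]) - result["S"][-1]
print(f"final S = {result['S'][-1]:.2f} mm, balance error = {closure:.2e} mm")
```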

Where does leaf area index (LAI) fit in the model input?

In many models it is an input, e.g. a parameter that varies per month and influences ET. Vegetation could also be a state variable (something you want to predict), co-evolving with water storage.

How can you quantify how well a model fits the data?

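What is the difference between the Nash-Sutcliffe efficiency (NSE) and the coefficient of determination (R^2)?

NSE has the same form as R^2: one minus the ratio of the squared errors to the variance of the observations. However, NSE compares the simulation directly with the observations, and it ranges from -infinity to +1.

R^2 is computed on a linear fit, which is already optimized. That fit can never be worse than the mean of the observations, so R^2 cannot drop below 0. A model that performs worse than the mean gives NSE < 0.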

Min 70!!
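A small illustration with hypothetical numbers shows why the two measures differ. Take a simulation that is perfectly correlated with the observations but shifted by a constant bias. It gets R^2 = 1, because the best linear fit absorbs the bias. NSE, which compares simulation and observations directly, becomes negative.

```python
import math

def nse(obs, sim):
    """Nash-Sutcliffe efficiency: 1 - sum of squared errors / variance of obs."""
    mean_obs = sum(obs) / len(obs)
    sse = sum((o - s) ** 2 for o, s in zip(obs, sim))
    sst = sum((o - mean_obs) ** 2 for o in obs)
    return 1.0 - sse / sst

def r_squared(obs, sim):
    """R^2 of the best linear fit between sim and obs (squared Pearson correlation)."""
    n = len(obs)
    mo, ms = sum(obs) / n, sum(sim) / n
    cov = sum((o - mo) * (s - ms) for o, s in zip(obs, sim))
    var_o = sum((o - mo) ** 2 for o in obs)
    var_s = sum((s - ms) ** 2 for s in sim)
    return cov ** 2 / (var_o * var_s)

obs = [1.0, 2.0, 3.0, 4.0, 5.0]     # hypothetical observed flows
sim = [o + 3.0 for o in obs]        # perfect timing and shape, constant bias of +3
print(r_squared(obs, sim))  # 1.0: the linear fit absorbs the bias
print(nse(obs, sim))        # -3.5: worse than simply using the mean of obs
```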

Explain the drawbacks of NSE, based on slides 23 and 25 (paper by Schaefli and X).

Slide 25: seasonality. In seasonal catchments the overall mean may no longer be a suitable benchmark, because NSE only compares against the average over the whole year.
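The seasonality problem can be sketched with hypothetical monthly flows that consist mostly of a seasonal cycle. The `benchmark_efficiency` function follows the general idea of replacing the overall mean in NSE with a benchmark series. Here the benchmark is the seasonal cycle, chosen as an assumption for illustration. The precipitation-based benchmark from the course material is not reproduced here.

```python
import math

def nse(obs, sim):
    mean_obs = sum(obs) / len(obs)
    sse = sum((o - s) ** 2 for o, s in zip(obs, sim))
    return 1.0 - sse / sum((o - mean_obs) ** 2 for o in obs)

def benchmark_efficiency(obs, sim, bench):
    """Like NSE, but with a benchmark series instead of the overall mean as reference."""
    sse_sim = sum((o - s) ** 2 for o, s in zip(obs, sim))
    sse_bench = sum((o - b) ** 2 for o, b in zip(obs, bench))
    return 1.0 - sse_sim / sse_bench

months = range(24)  # two years of hypothetical monthly flows
seasonal_cycle = [10.0 + 8.0 * math.sin(2 * math.pi * t / 12) for t in months]
obs = [c + 0.5 * (-1) ** t for t, c in zip(months, seasonal_cycle)]  # cycle + small anomalies

# A "model" that only knows the seasonal cycle already scores a very high NSE...
print(round(nse(obs, seasonal_cycle), 4))  # 0.9922
# ...but measured against the seasonal benchmark itself it adds nothing
print(benchmark_efficiency(obs, seasonal_cycle, seasonal_cycle))  # 0.0
```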

What do Schaefli and Gupta suggest to improve the precipitation-based benchmark efficiency? Explain this in 4 steps.

The 4 steps serve as a null hypothesis (zero hypothesis).

What is the Kling-Gupta efficiency (KGE) and how does it differ from NSE?

How can NSE be deconstructed?

NSE can be decomposed into correlation, variability and bias components, which are weighted unequally.
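Written out, one standard decomposition expresses NSE in terms of three quantities. These are the linear correlation r between simulation and observations, the variability ratio α, and the bias normalized by the observed standard deviation β_n. Here μ and σ are the mean and standard deviation of the simulated (s) and observed (o) series:

$$\mathrm{NSE} = 2\alpha r - \alpha^2 - \beta_n^2, \qquad \alpha = \frac{\sigma_s}{\sigma_o}, \qquad \beta_n = \frac{\mu_s - \mu_o}{\sigma_o}$$

For a fixed r, this expression is maximized at α = r rather than at α = 1. Maximizing NSE therefore tends to favour simulations that underestimate the variability whenever r < 1.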

Name and summarize the 3 issues with NSE and their current solutions.

2. Seasonality: a benchmark-based solution suggested by Schaefli and Gupta.

3. Unequal weighting of bias, variability and correlation: KGE proposes weighting them equally.
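As usually defined, KGE measures the Euclidean distance of (r, α, β) from the ideal point (1, 1, 1), where β = μ_s/μ_o is the ratio of the means. Each component therefore carries the same weight. Below is a minimal sketch on hypothetical data, using population standard deviations:

```python
import math

def kge(obs, sim):
    """Kling-Gupta efficiency: 1 - distance of (r, alpha, beta) from (1, 1, 1)."""
    n = len(obs)
    mu_o, mu_s = sum(obs) / n, sum(sim) / n
    sd_o = math.sqrt(sum((o - mu_o) ** 2 for o in obs) / n)
    sd_s = math.sqrt(sum((s - mu_s) ** 2 for s in sim) / n)
    r = sum((o - mu_o) * (s - mu_s) for o, s in zip(obs, sim)) / (n * sd_o * sd_s)
    alpha = sd_s / sd_o   # variability ratio
    beta = mu_s / mu_o    # bias ratio
    return 1.0 - math.sqrt((r - 1.0) ** 2 + (alpha - 1.0) ** 2 + (beta - 1.0) ** 2)

obs = [1.0, 2.0, 3.0, 4.0, 5.0]       # hypothetical observed flows
biased = [o + 3.0 for o in obs]       # same timing and variability, mean doubled
print(kge(obs, obs))     # close to 1: perfect simulation
print(kge(obs, biased))  # close to 0: r = 1 and alpha = 1, but beta = 2
```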

What is a constraint of modelling all non-linear flow as runoff processes in a hydrograph, as is often done in models?

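What happens when you apply a log transform to the Q-axis of a hydrograph? Give a mathematical example of a non-linear process.

A non-linear process would be exponential decay, Q(t) = Q0 · exp(-t/k). After a log transform it becomes the straight line ln Q(t) = ln Q0 - t/k.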

How do accuracy (bias) and precision (variance) behave in relation to model complexity, and why? How can this be used in practice?

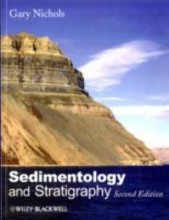
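The trade-off can be reproduced with a generic, non-hydrological example, used here only as an illustration. It applies a k-nearest-neighbour smoother to synthetic data, where a smaller k means a more flexible, more complex model. With k = 40 the model is just the overall mean (high bias). With k = 1 it reproduces the training data exactly (high variance). Training error keeps falling as complexity increases, while the error on independent validation data typically stops improving. Choosing the complexity with the lowest validation error is the practical use.

```python
import math
import random

def make_data(n, rng):
    """Hypothetical data: a smooth 'true' signal plus random noise."""
    xs = [rng.uniform(0.0, 1.0) for _ in range(n)]
    ys = [math.sin(2 * math.pi * x) + rng.gauss(0.0, 0.3) for x in xs]
    return xs, ys

def knn_predict(x, train_x, train_y, k):
    """Average of the k training values closest to x (smaller k = more complex model)."""
    nearest = sorted(range(len(train_x)), key=lambda i: abs(train_x[i] - x))[:k]
    return sum(train_y[i] for i in nearest) / k

def mse(xs, ys, train_x, train_y, k):
    return sum((knn_predict(x, train_x, train_y, k) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)

rng = random.Random(42)
train_x, train_y = make_data(40, rng)
val_x, val_y = make_data(40, rng)

results = {}
for k in (40, 20, 10, 5, 2, 1):  # from very simple (overall mean) to very flexible
    results[k] = (mse(train_x, train_y, train_x, train_y, k),
                  mse(val_x, val_y, train_x, train_y, k))
    print(f"k = {k:2d}: training MSE = {results[k][0]:.3f}, validation MSE = {results[k][1]:.3f}")

# Practical use: pick the complexity with the lowest error on independent data
best_k = min(results, key=lambda k: results[k][1])
print("selected k:", best_k)
```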
